Video restoration has evolved from a niche post-production task into a central pillar of modern media enhancement, archival preservation, and AI-powered content generation. Unlike single-image restoration, video restoration must address not only spatial quality but also the complex dimension of time. Flicker, jitter, ghosting, and inconsistent textures can easily break immersion. The core challenge is temporal consistency—ensuring that enhancements applied frame by frame remain coherent across motion and scene changes. As techniques have progressed from classical optical flow to deep spatiotemporal neural networks, the industry has redefined how coherence in motion is achieved.

TLDR: Video restoration must maintain visual consistency across time, not just within individual frames. Early solutions relied heavily on optical flow to track motion and propagate corrections, but they struggled with occlusions and complex dynamics. Modern spatiotemporal AI models learn motion and context jointly, dramatically improving stability and realism. However, maintaining temporal consistency remains one of the most demanding challenges in video AI.

At its core, video restoration includes tasks such as denoising, deblurring, super-resolution, colorization, and artifact removal. While image-based methods can perform these tasks frame by frame, they often fail when applied directly to video. Enhancing each frame independently may produce excellent results in isolation yet introduce subtle fluctuations between consecutive frames. These fluctuations, although small, add up to visible flicker that disrupts the viewing experience.

Why Temporal Consistency Matters

Temporal consistency refers to maintaining stable textures, colors, and structures over time. Human perception is highly sensitive to temporal inconsistencies. Even slight brightness shifts or texture variations across frames can create unnatural shimmering effects. When restoring old films or enhancing low-quality footage, these artifacts can undermine the entire enhancement process.

Key problems caused by poor temporal consistency include:

- Flickering: Frame-to-frame brightness or texture variation.

- Temporal ghosting: Residual trails caused by inaccurate motion compensation.

- Jittering details: Edges and fine patterns that appear unstable.

- Identity drift: Faces or objects subtly changing appearance across frames.

These issues reveal why video restoration cannot simply rely on powerful spatial models. It must incorporate motion understanding and temporal integration.
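To make the idea of flicker concrete, here is a minimal sketch of one possible way to quantify it. The function name `flicker_score` and the synthetic data are assumptions for illustration only. The measure looks only at global brightness, so it is meaningful mainly for static or slowly changing scenes.

```python
import numpy as np

def flicker_score(frames: np.ndarray) -> float:
    """Mean absolute change in average brightness between consecutive frames.

    frames: array of shape (T, H, W) with values roughly in [0, 1].
    """
    mean_brightness = frames.reshape(frames.shape[0], -1).mean(axis=1)
    return float(np.abs(np.diff(mean_brightness)).mean())

rng = np.random.default_rng(0)
base = rng.random((32, 32))

# A perfectly static clip: every frame is identical.
stable = np.stack([base] * 8)

# The same clip with a small random gain per frame, mimicking what an
# enhancement applied independently to each frame might produce.
gains = 1.0 + 0.05 * rng.standard_normal(8)
flickery = np.stack([base * g for g in gains])

stable_score = flicker_score(stable)
flickery_score = flicker_score(flickery)
print(stable_score, flickery_score)
```

The static clip scores exactly zero, while the clip with per-frame gain errors scores above zero even though each of its frames looks fine on its own.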

The Optical Flow Era

Before the rise of deep learning, optical flow was the dominant technique for enforcing temporal consistency. Optical flow estimates the motion of pixels between consecutive frames. By mapping how each pixel moves over time, restoration algorithms can align neighboring frames before applying enhancement.

In practice, a degraded video frame would be enhanced using information from adjacent frames warped according to estimated motion fields. This approach allowed algorithms to accumulate details from multiple frames while maintaining structural alignment.

Optical flow-based pipelines typically followed these steps:

- Estimate motion between neighboring frames.

- Warp adjacent frames to align with the target frame.

- Fuse aligned frames to reconstruct high-quality details.

- Apply spatial restoration algorithms.

This approach was particularly effective in multi-frame super-resolution. By combining slightly shifted details across frames, systems could reconstruct sharper imagery than single-frame solutions.
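The warp-and-fuse steps can be sketched in a few lines of NumPy. This is an illustrative toy, not a production pipeline. The motion is assumed to be known (a constant horizontal shift) rather than estimated, fusion is a plain average, and the final spatial restoration step is omitted. The helper name `backward_warp` is hypothetical.

```python
import numpy as np

def backward_warp(frame, flow):
    """Sample `frame` at (x + u, y + v) for every target pixel (x, y).

    frame: (H, W) array. flow: (H, W, 2) array, flow[..., 0] = u, flow[..., 1] = v.
    Uses bilinear interpolation; coordinates are clamped to the image border.
    """
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = (1 - wx) * frame[y0, x0] + wx * frame[y0, x1]
    bottom = (1 - wx) * frame[y1, x0] + wx * frame[y1, x1]
    return (1 - wy) * top + wy * bottom

rng = np.random.default_rng(0)
clean = rng.random((32, 32))

# Three noisy observations of the same scene, shifted horizontally by -2, 0, +2 pixels.
shifts = [-2, 0, 2]
noisy = [np.roll(clean, s, axis=1) + 0.1 * rng.standard_normal(clean.shape)
         for s in shifts]

# Step 1 (assumed known here): flow from the target frame to each neighbor.
flows = [np.stack([np.full(clean.shape, float(s)), np.zeros(clean.shape)], axis=-1)
         for s in shifts]

# Step 2: warp each frame onto the target grid. Step 3: fuse by averaging.
aligned = [backward_warp(f, fl) for f, fl in zip(noisy, flows)]
fused = np.mean(aligned, axis=0)

# Compare away from the left/right borders, where the shifts wrap around.
interior = (slice(None), slice(2, -2))
mse_single = np.mean((noisy[1][interior] - clean[interior]) ** 2)
mse_fused = np.mean((fused[interior] - clean[interior]) ** 2)
print(mse_single, mse_fused)
```

Because the noise in the three frames is independent, averaging the aligned frames lowers the error relative to the single middle frame. If the flow were wrong, the average would instead blend mismatched content.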

However, optical flow had significant limitations:

- Occlusions: Newly revealed or hidden regions often produced artifacts.

- Fast motion: Rapid movement reduced motion estimation accuracy.

- Motion blur: Blurred pixels confused correspondence estimation.

- Computational cost: High-quality flow estimation demanded intensive computation.

Errors in motion estimation propagated into restoration errors. Even a small patch of inaccurate flow vectors could produce visible ghosting or double edges. As video complexity increased (crowded scenes, dynamic lighting, non-rigid motion), the fragility of optical flow became apparent.

The Shift Toward Deep Learning

The deep learning revolution introduced convolutional neural networks (CNNs) capable of learning complex spatial patterns. Initially, video restoration models still processed frames individually, relying on implicit temporal smoothing. But researchers quickly recognized that ignoring explicit motion modeling led to instability.

The next generation of methods combined optical flow with neural networks. Flow-guided networks learned to refine alignment internally while enhancing spatial resolution. Although more robust than classical pipelines, these models still inherited the weaknesses of explicit flow dependence.

To address this, researchers began integrating motion estimation directly into neural architectures. Instead of treating flow as an external precomputed signal, deep models learned both motion and restoration jointly in an end-to-end fashion.

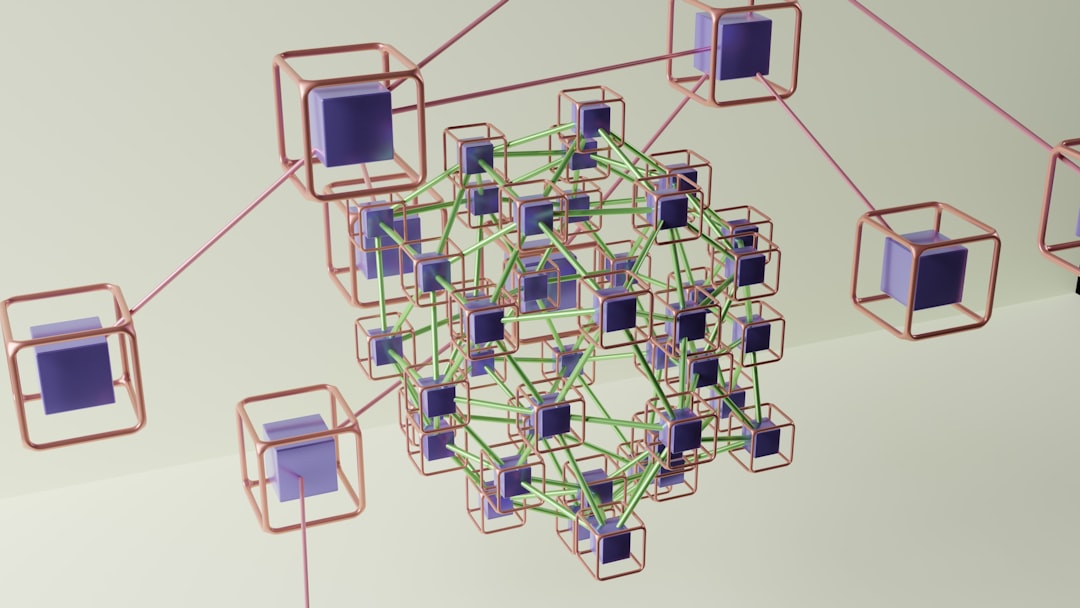

Spatiotemporal Architectures: Learning Motion and Context Together

Spatiotemporal AI models represent a turning point in video restoration. Rather than processing each frame in isolation, these systems operate on sequences, analyzing spatial and temporal dimensions simultaneously.

Key innovations include:

- 3D Convolutions: Extending kernels into the time dimension to capture motion patterns.

- Recurrent Networks: Propagating features forward and backward through time.

- Transformers: Using attention mechanisms to model long-range temporal dependencies.

- Deformable alignment layers: Learning flexible motion alignment without explicit flow fields.

These models reduce reliance on precise optical flow by allowing the network to discover motion representations that best serve the restoration objective. Instead of calculating pixel trajectories explicitly, spatiotemporal networks encode motion implicitly within feature maps.
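As a rough illustration of how a kernel that extends into the time dimension can respond to motion, the sketch below applies a hand-written 3D filter to two tiny clips. The function `conv3d_valid` and the fixed temporal-difference kernel are assumptions for demonstration. In a real network the kernel weights would be learned, and a deep learning framework would handle the convolution.

```python
import numpy as np

def conv3d_valid(video, kernel):
    """Plain 3D cross-correlation over (time, height, width), 'valid' padding."""
    kt, kh, kw = kernel.shape
    t, h, w = video.shape
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for i in range(kt):
        for j in range(kh):
            for k in range(kw):
                out += kernel[i, j, k] * video[i:i + out.shape[0],
                                               j:j + out.shape[1],
                                               k:k + out.shape[2]]
    return out

rng = np.random.default_rng(0)
image = rng.random((16, 16))

static_clip = np.stack([image] * 5)                                  # nothing moves
moving_clip = np.stack([np.roll(image, t, axis=1) for t in range(5)])  # 1 px per frame

# A kernel spanning 3 frames and 1x1 pixels: (frame t+1) minus (frame t-1).
temporal_diff = np.zeros((3, 1, 1))
temporal_diff[0, 0, 0] = -1.0
temporal_diff[2, 0, 0] = 1.0

static_response = conv3d_valid(static_clip, temporal_diff)
moving_response = conv3d_valid(moving_clip, temporal_diff)
print(np.abs(static_response).max(), np.abs(moving_response).max())
```

The filter is silent on the static clip and active on the moving one, which is the kind of motion-sensitive feature a 3D convolution can pick up without any explicit flow field.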

This shift brought major advantages:

- Improved robustness: Better handling of occlusions and complex motion.

- Global temporal awareness: Ability to consider distant frames.

- Feature-level consistency: Stable high-level representations over time.

- Reduced flicker: Learned temporal smoothing within latent space.

One notable advancement is bidirectional feature propagation. By passing information both forward and backward across frames, a model can restore each frame using context from both earlier and later frames, rather than only from what came before. This is loosely analogous to how a viewer interprets motion: not merely frame by frame, but with the whole sequence in mind.
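The effect of propagating in both directions can be seen in a toy recurrence. This sketch is only a stand-in: real models propagate learned feature maps through neural network blocks, usually with alignment, whereas here the hypothetical `propagate` function is a fixed exponential blend with an arbitrary `alpha`.

```python
import numpy as np

def propagate(features, alpha=0.5):
    """Simple recurrence along time: h_t = alpha * x_t + (1 - alpha) * h_{t-1}."""
    hidden = np.empty_like(features, dtype=np.float64)
    hidden[0] = features[0]
    for t in range(1, len(features)):
        hidden[t] = alpha * features[t] + (1 - alpha) * hidden[t - 1]
    return hidden

def bidirectional(features, alpha=0.5):
    """Average a forward pass and a backward pass over the same sequence."""
    forward = propagate(features, alpha)
    backward = propagate(features[::-1], alpha)[::-1]
    return 0.5 * (forward + backward)

# Seven frames of a one-value "feature"; only frame 3 carries information.
features = np.zeros((7, 1))
features[3] = 1.0

forward_only = propagate(features)
both_ways = bidirectional(features)
print(forward_only.ravel())
print(both_ways.ravel())
```

With forward propagation alone, frames 0 to 2 never see the information in frame 3. With both directions, every frame receives some of it, with nearer frames receiving more.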

Temporal Loss Functions and Training Strategies

Architecture alone does not guarantee temporal consistency. Training objectives play a crucial role. Modern systems include explicit temporal loss terms that penalize inconsistency between restored consecutive frames.

Common strategies include:

- Warping loss: Comparing a restored frame with a warped version of its neighbor.

- Perceptual temporal loss: Ensuring high-level feature similarity across time.

- Adversarial temporal discriminators: Encouraging realistic motion patterns.

- Cycle consistency constraints: Enforcing stability in forward-backward propagation.

These losses guide networks toward producing smooth transitions without sacrificing sharpness. The challenge lies in balancing stability with detail. Excessive smoothing may remove flicker but also destroy fine textures.
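A warping loss of the kind listed above might look like the following sketch. The names `warp_nearest` and `warping_loss`, the nearest-neighbor sampling, and the hand-made validity mask are all simplifying assumptions. Practical implementations typically use differentiable bilinear sampling inside a training framework and derive the mask from an occlusion estimate.

```python
import numpy as np

def warp_nearest(frame, flow):
    """Sample `frame` at the rounded position (x + u, y + v), clamped to the border."""
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return frame[y, x]

def warping_loss(restored_t, restored_prev, flow, mask):
    """Masked mean absolute difference between frame t and the previous
    restored frame warped onto frame t."""
    warped_prev = warp_nearest(restored_prev, flow)
    return float((mask * np.abs(restored_t - warped_prev)).sum() / max(mask.sum(), 1))

rng = np.random.default_rng(0)
prev = rng.random((16, 16))
cur = np.roll(prev, 1, axis=1)          # scene moves 1 px to the right

# Flow from frame t back to frame t-1: content at x came from x - 1.
flow = np.zeros((16, 16, 2))
flow[..., 0] = -1.0

# Ignore the first column, which has no valid correspondence in the previous frame.
mask = np.ones((16, 16))
mask[:, 0] = 0.0

loss_consistent = warping_loss(cur, prev, flow, mask)
loss_flicker = warping_loss(cur * 1.1, prev, flow, mask)  # 10% brightness jump
print(loss_consistent, loss_flicker)
```

The consistent pair incurs zero loss, while the pair with a brightness jump is penalized. In training, a term like this would be weighted against the reconstruction loss, which is where the balance between stability and detail comes in.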

Real-World Applications

Temporal consistency has practical importance across industries:

- Film restoration: Reviving archival footage without introducing artificial shimmer.

- Streaming enhancement: Improving compressed videos dynamically.

- Surveillance: Stabilizing noisy nighttime footage.

- Medical imaging: Restoring dynamic scans consistently over time.

- Content creation: AI upscaling for consumer video editing tools.

In film preservation, for example, frame-by-frame dust and scratch removal may erase genuine detail in some frames but not in others. A spatiotemporal model can use neighboring frames to tell transient defects from persistent image content, so defects are removed coherently while authentic grain patterns are preserved.

Remaining Challenges

Despite recent progress, temporal consistency is far from solved. Several open problems persist:

- Long videos: Maintaining coherence across hundreds or thousands of frames.

- Scene transitions: Preventing temporal blending when cuts occur.

- Identity preservation: Ensuring faces remain stable without drift.

- Generalization: Handling diverse content outside training datasets.
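For the scene-transition problem, one simple safeguard is to detect hard cuts and process each shot separately, so that no temporal state crosses a cut. The sketch below is a deliberately naive example. The function `detect_cuts` and its threshold are hypothetical, and a mean-absolute-difference test like this would miss gradual transitions such as fades.

```python
import numpy as np

def detect_cuts(frames, threshold=0.3):
    """Return indices t at which frame t appears to start a new shot, based on
    the mean absolute difference between frame t and frame t - 1."""
    diffs = np.abs(np.diff(frames, axis=0)).reshape(len(frames) - 1, -1).mean(axis=1)
    return [int(t) + 1 for t in np.flatnonzero(diffs > threshold)]

# Two synthetic shots: four dark frames followed by four bright frames.
video = np.concatenate([np.full((4, 8, 8), 0.1), np.full((4, 8, 8), 0.9)])

cuts = detect_cuts(video)
shots = np.split(video, cuts)   # each shot can then be restored independently
print(cuts, [len(s) for s in shots])
```

Restoring each shot on its own, or resetting any recurrent state at the detected indices, keeps frames from one scene out of the temporal context of the next.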

Transformer-based models show promise in modeling long-range dependencies, but they come with high memory demands. Efficient architectures remain a research priority.
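A back-of-the-envelope calculation shows why memory becomes a concern. The sketch assumes naive full attention over all space-time patches, one attention head, one layer, and 2 bytes per stored value. The function name and the patch size are illustrative choices, and real systems reduce this cost with windowed or memory-efficient attention.

```python
def attention_matrix_cost(frames, height, width, patch=8, bytes_per_value=2):
    """Token count and size in bytes of one full space-time attention matrix."""
    tokens = frames * (height // patch) * (width // patch)
    return tokens, tokens * tokens * bytes_per_value

tokens_16, bytes_16 = attention_matrix_cost(16, 256, 256)
tokens_32, bytes_32 = attention_matrix_cost(32, 256, 256)

print(tokens_16, bytes_16 / 2**30)  # 16384 tokens, 0.5 GiB
print(tokens_32, bytes_32 / 2**30)  # 32768 tokens, 2.0 GiB
```

Doubling the number of frames doubles the token count but quadruples the size of the attention matrix, which is why long sequences are difficult to attend over in one pass.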

Another critical issue is the trade-off between determinism and creativity. Some generative models hallucinate plausible details during restoration. While visually pleasing, these generated elements may vary slightly between frames, introducing subtle temporal instability. Finding ways to constrain generative freedom without sacrificing realism is an ongoing research frontier.

The Future of Spatiotemporal AI

Emerging trends suggest a move toward unified video foundation models—systems trained on massive datasets capable of performing denoising, super-resolution, interpolation, and colorization within a single architecture. These models integrate spatial texture understanding with deep temporal reasoning.

Hybrid approaches are also gaining attention. Combining physics-based models with deep learning may introduce interpretability while preserving performance. Additionally, diffusion-based generative models adapted for video show remarkable detail reconstruction, though temporal stabilization mechanisms remain under active development.

The ultimate goal is to approach perceptual indistinguishability—where enhanced footage appears naturally captured rather than reconstructed. Achieving that requires not only superior spatial fidelity but seamless motion continuity.

Conclusion

The evolution from optical flow pipelines to sophisticated spatiotemporal AI reflects the growing understanding that video is fundamentally more than a sequence of images. Temporal consistency lies at the heart of realistic restoration. Early flow-based methods established the foundation by aligning motion explicitly, yet struggled in complex scenarios. Modern neural architectures now learn motion implicitly, integrating context across time with unprecedented effectiveness.

Nevertheless, maintaining stable and coherent outputs across extended sequences remains a demanding challenge. As research advances toward larger models and smarter temporal constraints, video restoration continues its transformation into a deeply integrated spatiotemporal intelligence problem—where time is not just an added dimension, but a defining one.

FAQ

1. What is temporal consistency in video restoration?

Temporal consistency ensures that restored video frames remain visually stable and coherent over time, preventing flicker, jitter, and other frame-to-frame inconsistencies.

2. Why can’t image restoration methods be directly applied to video?

Image methods process frames independently and ignore motion information, which often leads to inconsistent textures and brightness variations across frames.

3. How does optical flow help with video restoration?

Optical flow estimates pixel motion between frames, allowing algorithms to align and merge information from multiple frames for better detail reconstruction.

4. What are spatiotemporal neural networks?

Spatiotemporal networks analyze both spatial features and temporal relationships simultaneously, learning motion representations directly within the model.

5. Are modern AI models free from temporal artifacts?

No. While significantly improved, even advanced spatiotemporal models can struggle with long sequences, rapid motion, or scene transitions.

6. What is the future direction of video restoration technology?

Research is moving toward large-scale foundation models, transformer-based architectures, and diffusion-based systems that integrate stronger temporal reasoning and improved efficiency.